The following is a brief review of the paper.

In general, a MapReduce-based data warehouse needs to address the following requirements.

Requirements

1. fast loading: able to load large amounts of data quickly.

2. fast query processing: queries should execute quickly.

3. efficient storage space utilization

4. strong adaptivity to highly dynamic workload patterns

To meet these requirements, there have been 3 major data placement schemes.

1. row-store

cons

- can't provide fast query execution (full scan of all records, no skipping of unnecessary columns)

- can't achieve high storage space utilization, since it is hard to compress rows efficiently

pros

- fast data load

- strong adaptivity to dynamic workloads

2. column-store

cons

- tuple reconstruction is costly => query processing slows down

- record reconstruction overhead => since the columns of a record may reside in multiple locations in the cluster, network cost goes up.

- requires prior knowledge of the likely queries in order to create column groups

pros

- at query execution time, can avoid reading unnecessary columns.

- high compression ratio
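The row-store vs. column-store trade-off above can be illustrated with a small sketch. This is a toy in-memory model, not how any real storage engine is implemented; the table contents and the function names (`scan_row_store`, `scan_column_store`, `reconstruct`) are made up for illustration.

```python
# Toy table: three columns, four rows (illustrative data only).
rows = [
    (1, "alice", 30),
    (2, "bob", 25),
    (3, "carol", 41),
    (4, "dave", 38),
]

def scan_row_store(rows, col_index):
    """Row-store: records are kept whole, so every field gets read."""
    fields_read = 0
    values = []
    for record in rows:
        fields_read += len(record)      # the whole record is touched
        values.append(record[col_index])
    return values, fields_read

# Column-store: each column is kept as its own list.
columns = [list(col) for col in zip(*rows)]

def scan_column_store(columns, col_index):
    """Column-store: only the requested column is read."""
    values = list(columns[col_index])
    return values, len(values)

def reconstruct(columns):
    """Tuple reconstruction: stitch the columns back into records."""
    return list(zip(*columns))

print(scan_row_store(rows, 2))        # reads all 12 fields
print(scan_column_store(columns, 2))  # reads only 4 fields
```

In a real cluster the columns may live on different nodes, so `reconstruct` would involve network transfer, which is the overhead listed under cons.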

3. PAX store

cons

pros

What RCFile provides

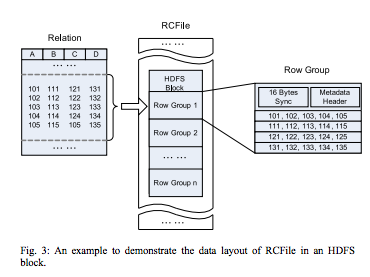

1. horizontally partition rows into multiple row groups

2. column-wise data compression within row group + lazy decompression during query execution

3. flexible row group size, a trade-off between data compression performance and query execution performance

4. guarantees that data in the same row are located in the same node

5. column-wise data compression, and skipping of unnecessary column reads
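The points above can be sketched roughly as follows. This is only a toy model under my own assumptions, not the actual RCFile format: values are joined with newlines, `zlib` stands in for Gzip, and the function names (`write_row_groups`, `decode`, `select_where`) are hypothetical.

```python
import zlib

def write_row_groups(rows, rows_per_group):
    """Horizontally partition rows into row groups; inside each group,
    store every column as one independently compressed blob."""
    groups = []
    for start in range(0, len(rows), rows_per_group):
        chunk = rows[start:start + rows_per_group]
        groups.append([
            zlib.compress("\n".join(str(v) for v in col).encode("utf-8"))
            for col in zip(*chunk)
        ])
    return groups

def decode(blob):
    """Decompress one column blob back into a list of string values."""
    return zlib.decompress(blob).decode("utf-8").split("\n")

def select_where(groups, filter_col, predicate, out_col):
    """Return out_col values for rows whose filter_col passes predicate.
    out_col is decompressed lazily: only in row groups with a match."""
    results = []
    for group in groups:
        filt = decode(group[filter_col])
        hits = [i for i, v in enumerate(filt) if predicate(v)]
        if not hits:
            continue  # no match here, so out_col stays compressed
        out = decode(group[out_col])
        results.extend(out[i] for i in hits)
    return results

rows = [(1, "a", "x"), (2, "b", "y"), (3, "c", "z")]
groups = write_row_groups(rows, rows_per_group=2)
print(select_where(groups, 0, lambda v: int(v) >= 3, 1))
```

Since a whole row group is written as one unit, all columns of a row stay together, and a larger `rows_per_group` gives the compressor more data per column at the cost of coarser skipping.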

Row group in RCFile

1. sync marker: at beginning of the row group

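2. metadata header: for the row group, stores the number of records, the number of bytes in each column, and the number of bytes in each field of each column.

3. table data section: stored column-wise, with all of column A's fields, then all of column B's fields, and so on, in sorted order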

4. RLE for metadata header

5. each column is compressed independently using heavy-weight Gzip => lazy decompression
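To see why RLE suits the metadata header, consider the per-field byte lengths of a column: fixed-width values produce long runs of the same length. Below is a generic run-length encoder as a sketch; the actual byte-level encoding used by RCFile is not shown here, and `rle_encode` / `rle_decode` are hypothetical names.

```python
def rle_encode(lengths):
    """Collapse consecutive equal values into (value, run_length) pairs."""
    runs = []
    for n in lengths:
        if runs and runs[-1][0] == n:
            runs[-1][1] += 1        # extend the current run
        else:
            runs.append([n, 1])     # start a new run
    return [tuple(r) for r in runs]

def rle_decode(runs):
    """Expand (value, run_length) pairs back into the original list."""
    out = []
    for value, count in runs:
        out.extend([value] * count)
    return out

# Field lengths in bytes for one column (made-up numbers).
field_lengths = [4, 4, 4, 7, 7, 4]
print(rle_encode(field_lengths))
```

A column of fixed-width integers would collapse to a single pair, no matter how many records the row group holds.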

future work: automatically select the best compression algorithm for each column according to its data type and data distribution

TODO: take a look at org.apache.hadoop.hive.ql.io.RCFile and others
